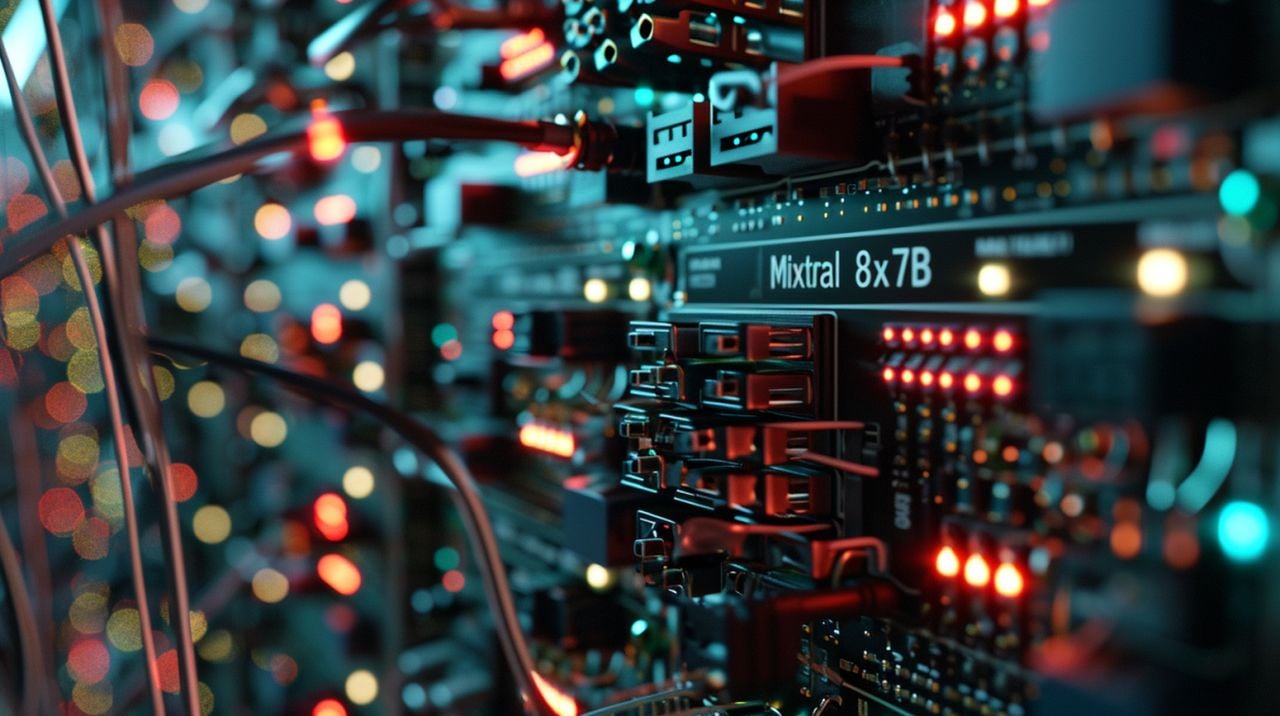

When it comes to enhancing the capabilities of Mixtral 8x7B, an artificial intelligence model with roughly 46.7 billion parameters in total, the task can seem daunting. This model, which falls into the category of a mixture of experts (MoE), stands out for its efficiency and the quality of its output. It competes with models such as GPT-4 and has been shown to outperform Llama 2 70B on some performance benchmarks. This article walks you through the process of fine-tuning Mixtral 8x7B so that it precisely meets the demands of your computational tasks.

It helps to understand how Mixtral 8x7B works. It routes each input to the most suitable “experts” within its system, much like a team of specialists who each manage their own domain. This approach significantly improves the model's processing efficiency and the quality of its output. The Mixtral-8x7B Large Language Model (LLM) is a pretrained generative sparse mixture of experts, and according to Mistral AI's model card it outperforms Llama 2 70B on most of the benchmarks tested.
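
The routing idea can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the router scores are made up, the eight "experts" are toy functions that scale their input rather than real feed-forward blocks, and the names `route_top_k` and `moe_layer` are hypothetical. It assumes two of eight experts are selected per token and that the softmax is taken over the selected scores only.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_logits, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    weights = softmax([gate_logits[i] for i in top])  # renormalise over the chosen experts
    return list(zip(top, weights))

def moe_layer(x, gate_logits, experts, k=2):
    """Weighted sum of the outputs of the selected experts only."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_logits, k))

# Eight toy experts (hypothetical stand-ins): expert i multiplies its input by i + 1.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
gate_logits = [0.1, 2.0, -1.0, 0.3, 1.5, 0.0, -0.5, 0.2]  # made-up router scores for one token

selected = route_top_k(gate_logits)
output = moe_layer(3.0, gate_logits, experts)
print(selected, output)
```

Only the two selected experts are evaluated for this token, which is why a sparse model can hold many parameters while using a fraction of them per token.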

Fine-tuning the Mixtral 8x7B AI model

To begin the fine-tuning process, set up a robust GPU environment. A configuration with at least four T4 GPUs is recommended to handle the model's computational demands. This setup allows faster and more efficient data processing, which matters throughout fine-tuning.
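
A rough back-of-the-envelope check shows why the GPU budget matters. The sketch below estimates the memory needed for the weights alone at different precisions and compares it with four T4 cards. It assumes about 46.7 billion total parameters and 16 GB per T4, and it ignores activations, optimizer state, and quantization overhead, so real requirements are higher.

```python
def weight_memory_gb(n_params, bits_per_param):
    """Memory for the weights alone, in gigabytes (10**9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9

N_PARAMS = 46.7e9    # approximate total parameter count (assumption)
T4_MEMORY_GB = 16    # memory per T4 card
N_GPUS = 4

budget_gb = T4_MEMORY_GB * N_GPUS
estimates = {bits: weight_memory_gb(N_PARAMS, bits) for bits in (16, 8, 4)}

for bits, gb in estimates.items():
    verdict = "fits" if gb < budget_gb else "does not fit"
    print(f"{bits}-bit weights: {gb:.1f} GB ({verdict} in {budget_gb} GB)")
```

Under these assumptions, 16-bit weights exceed the combined memory of four T4s, which is one reason lower-precision loading is attractive on this hardware.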

Given the model's large size, techniques such as quantization and low-rank adaptation (LoRA) are essential. Quantization stores the weights at lower precision, and LoRA trains small adapter matrices instead of the full weights. Together they shrink the model's footprint with little loss in performance. It is akin to tuning a machine so that it runs at its best efficiency.
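
To make the idea of quantization concrete, here is a deliberately simplified sketch of symmetric "absmax" quantization to 4 bits. It is not the scheme used by production libraries, which typically work block by block and may use non-uniform levels. The weights are made up and the function names are hypothetical.

```python
def quantize_absmax(values, bits=4):
    """Map floats to signed integer codes in [-qmax, qmax] using one shared scale."""
    qmax = 2 ** (bits - 1) - 1                      # 7 for 4-bit signed codes
    scale = (max(abs(v) for v in values) / qmax) or 1.0  # avoid a zero scale
    codes = [round(v / scale) for v in values]
    return codes, scale

def dequantize(codes, scale):
    """Recover approximate floats from the integer codes."""
    return [c * scale for c in codes]

weights = [0.12, -0.53, 0.98, -0.05, 0.33, -0.88]   # made-up example weights
codes, scale = quantize_absmax(weights, bits=4)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("codes:", codes)
print("max round-trip error:", max_error)
```

Each weight is now stored as a small integer plus one shared scale, and the round-trip error is bounded by half the scale. That trade of precision for memory is the essence of the technique.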

In this example, the ViGGO dataset plays a central role in fine-tuning. It provides a specific type of output that is useful for testing and refining the model's performance. The first step is to load and tokenize the data, making sure the maximum sequence length of the tokenized inputs matches the model's requirements.
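
The effect of a fixed maximum length can be shown with a toy example. The sketch below uses a whitespace tokenizer and a tiny vocabulary built on the fly, both hypothetical stand-ins for the model's real tokenizer, to show how every example is truncated or padded to the same length and how the attention mask marks the real tokens.

```python
def encode(text, vocab, max_length, pad_id=0, unk_id=1):
    """Whitespace-tokenize, then truncate or right-pad to exactly max_length."""
    ids = [vocab.get(tok, unk_id) for tok in text.lower().split()][:max_length]
    n_pad = max_length - len(ids)
    return {
        "input_ids": ids + [pad_id] * n_pad,
        "attention_mask": [1] * len(ids) + [0] * n_pad,  # 1 = real token, 0 = padding
    }

# Made-up example sentences; a real run would read them from the dataset.
examples = [
    "recommend a racing game",
    "confirm the release year of the game please",
]

# Toy vocabulary: ids 0 and 1 are reserved for padding and unknown tokens.
vocab = {"<pad>": 0, "<unk>": 1}
for text in examples:
    for tok in text.lower().split():
        vocab.setdefault(tok, len(vocab))

batch = [encode(text, vocab, max_length=6) for text in examples]
for row in batch:
    print(row)
```

The first sentence is padded and the second is truncated, so both end up as rows of identical length that can be stacked into one tensor.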

Applying LoRA to the model's layers is a strategic decision. It sharply reduces the number of trainable parameters, which lowers the resources required and speeds up fine-tuning. This is a key factor in managing the model's computational demands.
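
The parameter saving follows from simple arithmetic. A low-rank adapter for a weight matrix of shape d_out x d_in trains two small matrices, B (d_out x r) and A (r x d_in), and adds their scaled product to the frozen weight. The sketch below counts the parameters and runs a tiny forward pass. The 4096 x 4096 shape, the rank of 8, and the scaling factor are illustrative assumptions, not settings taken from the article.

```python
def lora_trainable_params(d_out, d_in, rank):
    """Parameters in B (d_out x rank) plus A (rank x d_in)."""
    return rank * (d_out + d_in)

def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def lora_forward(W, A, B, x, alpha, rank):
    """y = W x + (alpha / rank) * B (A x); W stays frozen, only A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / rank) * u for b, u in zip(base, update)]

# Parameter count for one illustrative projection matrix.
d_out, d_in, rank = 4096, 4096, 8
full = d_out * d_in
lora = lora_trainable_params(d_out, d_in, rank)
fraction = lora / full
print(f"full: {full:,}  LoRA: {lora:,}  ({fraction:.2%} of the full matrix)")

# Tiny forward pass: with B initialised to zero the adapter changes nothing.
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 1.0]]
x = [2.0, 3.0]
y_start = lora_forward(W, A, [[0.0], [0.0]], x, alpha=2, rank=1)
y_trained = lora_forward(W, A, [[1.0], [0.0]], x, alpha=2, rank=1)
```

Starting with B at zero means fine-tuning begins from the pretrained model's exact behaviour and moves away from it only as the adapter learns.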

Training Mixtral 8x7B involves setting up checkpoints, tuning the learning rate, and monitoring the run to prevent overfitting. These steps support effective learning and ensure the model does not fit the training data too closely.
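
Two of these controls can be sketched without any training framework: a learning-rate schedule and an early-stopping rule driven by validation loss. The schedule shape (linear warmup, then cosine decay), the peak rate, the patience value, and the loss numbers are all illustrative assumptions, and the function names are hypothetical.

```python
import math

def lr_at(step, total_steps, peak_lr=2e-4, warmup_steps=10):
    """Linear warmup to peak_lr, then cosine decay towards zero."""
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return peak_lr * 0.5 * (1 + math.cos(math.pi * progress))

def early_stopping_scan(val_losses, patience=2):
    """Return (index of best evaluation, index at which training would stop)."""
    best_loss, best_idx, bad_evals = float("inf"), -1, 0
    for idx, loss in enumerate(val_losses):
        if loss < best_loss:
            # A real run would save a checkpoint here.
            best_loss, best_idx, bad_evals = loss, idx, 0
        else:
            bad_evals += 1
            if bad_evals >= patience:
                return best_idx, idx
    return best_idx, len(val_losses) - 1

# Made-up validation losses: improving at first, then rising as overfitting sets in.
val_losses = [1.90, 1.42, 1.21, 1.18, 1.25, 1.31, 1.40]
best_idx, stopped_idx = early_stopping_scan(val_losses)
print(f"best checkpoint: evaluation {best_idx}, stopped at evaluation {stopped_idx}")
```

Keeping the checkpoint from the best evaluation, rather than the last one, is what protects the final model when validation loss starts to rise.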

Once the model has been fine-tuned, evaluate its performance on the ViGGO dataset. This evaluation helps you determine what improvements were achieved and confirm that the model is ready for deployment.
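
One simple way to quantify the improvement is to compare the base and fine-tuned models on held-out examples using an exact-match score. The sketch below assumes the task produces short structured strings. The reference and prediction strings are made up for illustration, and exact match is only one possible metric, not necessarily the one used in the original walkthrough.

```python
def normalize(text):
    """Lowercase and collapse whitespace so trivial differences do not count as errors."""
    return " ".join(text.lower().split())

def exact_match(predictions, references):
    """Fraction of predictions that equal their reference after normalisation."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(references)

# Made-up held-out references and model outputs.
references = [
    "inform(name[Game A], release_year[2011])",
    "request(genres[racing])",
    "confirm(name[Game B])",
    "inform(name[Game C], rating[good])",
]
base_predictions = [
    "This game was released in 2011.",
    "request(genres[racing])",
    "I am not sure.",
    "inform(name[Game C])",
]
tuned_predictions = [
    "inform(name[Game A], release_year[2011])",
    "request(genres[racing])",
    "confirm(name[Game B])",
    "inform(name[Game C], rating[average])",
]

base_score = exact_match(base_predictions, references)
tuned_score = exact_match(tuned_predictions, references)
print(f"base: {base_score:.2f}  fine-tuned: {tuned_score:.2f}")
```

Scoring both models on the same held-out examples keeps the comparison fair and makes regressions easy to spot before deployment.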

Engaging with the AI community by sharing your progress and asking for feedback can provide valuable insights and lead to further improvements. Platforms such as YouTube are well suited to this kind of interaction and discussion.

Fine-tuning Mixtral 8x7B is a meticulous but rewarding process. By following these steps and taking the model's computational requirements into account, you can significantly improve its performance for your specific applications. The result is an efficient, capable AI tool that can handle complex tasks with ease.
